Vision DataModules

The following are pre-built datamodules for computer vision.

Supervised learning

These are standard vision datasets with the train, val, and test splits pre-generated in DataLoaders, using the standard transforms (and normalization values).

BinaryMNIST

class pl_bolts.datamodules.binary_mnist_datamodule.BinaryMNISTDataModule(data_dir=None, val_split=0.2, num_workers=16, normalize=False, batch_size=32, seed=42, shuffle=False, pin_memory=False, drop_last=False, *args, **kwargs)

Bases: pytorch_lightning.

- Specs:

10 classes (1 per digit)

Each image is (1 x 28 x 28)

Binary MNIST, train, val, test splits and transforms

Transforms:

    mnist_transforms = transform_lib.Compose([
        transform_lib.ToTensor()
    ])

Example:

    from pytorch_lightning import Trainer
    from pl_bolts.datamodules import BinaryMNISTDataModule

    dm = BinaryMNISTDataModule('.')
    model = LitModel()  # LitModel is your own LightningModule
    Trainer().fit(model, datamodule=dm)

- Parameters

val_split (Union[int, float]) – Percent (float) or number (int) of samples to use for the validation split

num_workers (int) – How many workers to use for loading data

seed (int) – Random seed to be used for train/val/test splits

shuffle (bool) – If true, shuffles the train data every epoch

pin_memory (bool) – If true, the data loader will copy Tensors into CUDA pinned memory before returning them
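The val_split parameter above accepts either a fraction or an absolute count. The following is a minimal sketch of how the two readings translate into split lengths. The helper split_lengths is hypothetical and not part of pl_bolts; the 60,000 figure is the size of the standard MNIST training set.

```python
from typing import Tuple, Union


def split_lengths(dataset_len: int, val_split: Union[int, float]) -> Tuple[int, int]:
    """Return (train_len, val_len) for a dataset of ``dataset_len`` samples.

    Hypothetical helper, not part of pl_bolts: a float is read as a
    fraction of the dataset, an int as an absolute number of samples.
    """
    if isinstance(val_split, bool):
        raise TypeError("val_split must be an int or a float, not a bool")
    if isinstance(val_split, int):
        val_len = val_split
    elif isinstance(val_split, float):
        val_len = int(val_split * dataset_len)
    else:
        raise TypeError(f"unsupported type for val_split: {type(val_split)}")
    if not 0 <= val_len <= dataset_len:
        raise ValueError("validation split does not fit inside the dataset")
    return dataset_len - val_len, val_len


# MNIST ships 60,000 training images; the default val_split is 0.2.
print(split_lengths(60_000, 0.2))    # val_split read as a fraction
print(split_lengths(60_000, 5_000))  # val_split read as a sample count
```

With the default val_split=0.2, this reading leaves 48,000 images for training and 12,000 for validation.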

Cityscapes

class pl_bolts.datamodules.cityscapes_datamodule.CityscapesDataModule(data_dir, quality_mode='fine', target_type='instance', num_workers=16, batch_size=32, seed=42, shuffle=False, pin_memory=False, drop_last=False, *args, **kwargs)

Bases: pytorch_lightning.

Standard Cityscapes, train, val, test splits and transforms

- Note: You need to have downloaded the Cityscapes dataset first and provide the path to where it is saved.

You can download the dataset here: https://www.cityscapes-dataset.com/

- Specs:

30 classes (road, person, sidewalk, etc…)

(image, target) - image dims: (3 x 1024 x 2048), target dims: (1024 x 2048)

Transforms:

    transforms = transform_lib.Compose([
        transform_lib.ToTensor(),
        transform_lib.Normalize(
            mean=[0.28689554, 0.32513303, 0.28389177],
            std=[0.18696375, 0.19017339, 0.18720214]
        )
    ])

Example:

    from pytorch_lightning import Trainer
    from pl_bolts.datamodules import CityscapesDataModule

    dm = CityscapesDataModule(PATH)
    model = LitModel()  # LitModel is your own LightningModule
    Trainer().fit(model, datamodule=dm)

Or you can set your own transforms.

Example:

    dm.train_transforms = ...
    dm.test_transforms = ...
    dm.val_transforms = ...
    dm.target_transforms = ...

- Parameters

data_dir (str) – where to load the data from, i.e. the directory where leftImg8bit and gtFine or gtCoarse are located

quality_mode (str) – the quality mode to use, either ‘fine’ or ‘coarse’

target_type (str) – targets to use, either ‘instance’ or ‘semantic’

num_workers (int) – how many workers to use for loading data

batch_size (int) – number of examples per training/eval step

seed (int) – random seed to be used for train/val/test splits

pin_memory (bool) – If true, the data loader will copy Tensors into CUDA pinned memory before returning them

test_dataloader()

Cityscapes test set

train_dataloader()

Cityscapes train set

val_dataloader()

Cityscapes val set

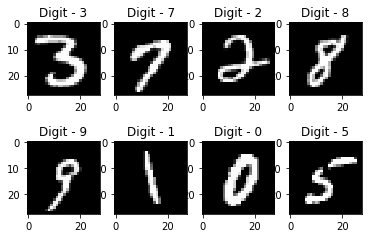

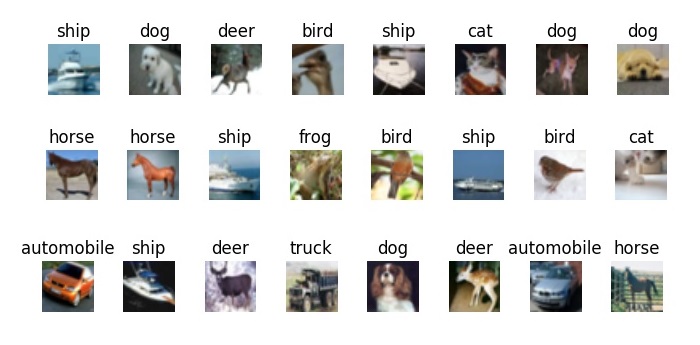

CIFAR-10

class pl_bolts.datamodules.cifar10_datamodule.CIFAR10DataModule(data_dir=None, val_split=0.2, num_workers=16, normalize=False, batch_size=32, seed=42, shuffle=False, pin_memory=False, drop_last=False, *args, **kwargs)

Bases: pytorch_lightning.

- Specs:

10 classes (1 per class)

Each image is (3 x 32 x 32)

Standard CIFAR10, train, val, test splits and transforms

Transforms:

    cifar10_transforms = transform_lib.Compose([
        transform_lib.ToTensor(),
        transform_lib.Normalize(
            mean=[x / 255.0 for x in [125.3, 123.0, 113.9]],
            std=[x / 255.0 for x in [63.0, 62.1, 66.7]]
        )
    ])
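The normalization statistics above are given on the 0-255 pixel scale and divided by 255 because ToTensor() rescales image values to [0, 1]. As a rough illustration in plain Python (no torchvision involved; normalize_pixel is a hypothetical helper), the per-channel operation is (x - mean) / std:

```python
# Per-channel statistics from the transform above, on the 0-255 scale.
mean_255 = [125.3, 123.0, 113.9]
std_255 = [63.0, 62.1, 66.7]

# ToTensor() rescales pixels to [0, 1], so the statistics are rescaled too.
mean = [m / 255.0 for m in mean_255]
std = [s / 255.0 for s in std_255]


def normalize_pixel(rgb):
    """Apply (x - mean) / std per channel to one RGB pixel in [0, 1]."""
    return [(x - m) / s for x, m, s in zip(rgb, mean, std)]


print([round(m, 4) for m in mean])  # channel means on the [0, 1] scale
print(normalize_pixel(mean))        # a pixel equal to the mean maps to zeros
```

After normalization, each channel is centered near zero with roughly unit spread over the training set.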

Example:

    from pytorch_lightning import Trainer
    from pl_bolts.datamodules import CIFAR10DataModule

    dm = CIFAR10DataModule(PATH)
    model = LitModel()  # LitModel is your own LightningModule
    Trainer().fit(model, datamodule=dm)

Or you can set your own transforms.

Example:

    dm.train_transforms = ...
    dm.test_transforms = ...
    dm.val_transforms = ...

- Parameters

val_split (Union[int, float]) – Percent (float) or number (int) of samples to use for the validation split

num_workers (int) – How many workers to use for loading data

seed (int) – Random seed to be used for train/val/test splits

shuffle (bool) – If true, shuffles the train data every epoch

pin_memory (bool) – If true, the data loader will copy Tensors into CUDA pinned memory before returning them
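The seed parameter exists so that the random train/val partition is the same on every run. The sketch below illustrates the idea with the standard library only; it is a stand-in for whatever splitting routine the datamodule uses internally, and seeded_split is a hypothetical helper. The 50,000 figure is the size of the CIFAR-10 training set.

```python
import random


def seeded_split(num_samples, val_len, seed=42):
    """Return (train_indices, val_indices), reproducible for a given seed."""
    indices = list(range(num_samples))
    random.Random(seed).shuffle(indices)  # private RNG, global state untouched
    return indices[val_len:], indices[:val_len]


train_a, val_a = seeded_split(50_000, 10_000, seed=42)
train_b, val_b = seeded_split(50_000, 10_000, seed=42)
print(val_a == val_b)                  # same seed, same validation subset
print(set(train_a).isdisjoint(val_a))  # no sample appears in both subsets
```

Keeping the seed fixed across experiments means validation metrics are computed on the same held-out images each time.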

FashionMNIST

class pl_bolts.datamodules.fashion_mnist_datamodule.FashionMNISTDataModule(data_dir=None, val_split=0.2, num_workers=16, normalize=False, batch_size=32, seed=42, shuffle=False, pin_memory=False, drop_last=False, *args, **kwargs)

Bases: pytorch_lightning.

- Specs:

10 classes (1 per type)

Each image is (1 x 28 x 28)

Standard FashionMNIST, train, val, test splits and transforms

Transforms:

    mnist_transforms = transform_lib.Compose([
        transform_lib.ToTensor()
    ])

Example:

    from pytorch_lightning import Trainer
    from pl_bolts.datamodules import FashionMNISTDataModule

    dm = FashionMNISTDataModule('.')
    model = LitModel()  # LitModel is your own LightningModule
    Trainer().fit(model, datamodule=dm)

- Parameters

val_split (Union[int, float]) – Percent (float) or number (int) of samples to use for the validation split

num_workers (int) – How many workers to use for loading data

seed (int) – Random seed to be used for train/val/test splits

shuffle (bool) – If true, shuffles the train data every epoch

pin_memory (bool) – If true, the data loader will copy Tensors into CUDA pinned memory before returning them

Imagenet

class pl_bolts.datamodules.imagenet_datamodule.ImagenetDataModule(data_dir, meta_dir=None, num_imgs_per_val_class=50, image_size=224, num_workers=16, batch_size=32, shuffle=False, pin_memory=False, drop_last=False, *args, **kwargs)

Bases: pytorch_lightning.

- Specs:

1000 classes

Each image is (3 x varies x varies) (here we default to 3 x 224 x 224)

ImageNet train, val, and test dataloaders.

The train set is the ImageNet train set.

The val set is taken from the train set with num_imgs_per_val_class images per class. For example, if num_imgs_per_val_class=2, there will be 2,000 images in the validation set.

The test set is the official ImageNet validation set.
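The validation-set size follows directly from the class count in the Specs and num_imgs_per_val_class. A small sketch of that arithmetic (val_set_size is a hypothetical helper, not a library function):

```python
NUM_CLASSES = 1000  # from the Specs above


def val_set_size(num_imgs_per_val_class: int, num_classes: int = NUM_CLASSES) -> int:
    """Number of training images held out for validation."""
    return num_imgs_per_val_class * num_classes


print(val_set_size(2))   # the example from the text
print(val_set_size(50))  # the default num_imgs_per_val_class=50
```

With the default of 50 images per class, 50,000 training images are held out for validation.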

Example:

    from pytorch_lightning import Trainer
    from pl_bolts.datamodules import ImagenetDataModule

    dm = ImagenetDataModule(IMAGENET_PATH)
    model = LitModel()  # LitModel is your own LightningModule
    Trainer().fit(model, datamodule=dm)

prepare_data()

This method assumes you have already downloaded imagenet2012. It validates the data using the meta.bin file.

Warning

Please download ImageNet on your own first.

test_dataloader()

Uses the validation split of imagenet2012 for testing.

train_dataloader()

Uses the train split of imagenet2012 and sets aside a portion of it for the validation split.

train_transform()

The standard ImageNet transforms:

    transform_lib.Compose([
        transform_lib.RandomResizedCrop(self.image_size),
        transform_lib.RandomHorizontalFlip(),
        transform_lib.ToTensor(),
        transform_lib.Normalize(
            mean=[0.485, 0.456, 0.406],
            std=[0.229, 0.224, 0.225]
        ),
    ])

val_dataloader()

Uses the part of the imagenet2012 train split that was held out from training via num_imgs_per_val_class.

val_transform()

The standard ImageNet transforms for validation:

    transform_lib.Compose([
        transform_lib.Resize(self.image_size + 32),
        transform_lib.CenterCrop(self.image_size),
        transform_lib.ToTensor(),
        transform_lib.Normalize(
            mean=[0.485, 0.456, 0.406],
            std=[0.229, 0.224, 0.225]
        ),
    ])
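To make the geometry of the validation transform concrete, here is a rough plain-Python sketch of what Resize(image_size + 32) followed by CenterCrop(image_size) does to an image's dimensions. It assumes an integer Resize argument rescales the shorter side while keeping the aspect ratio; both helpers are hypothetical, and the exact rounding in torchvision may differ by a pixel.

```python
def resize_shorter_side(width, height, size):
    """Scale so the shorter side equals ``size``, keeping the aspect ratio."""
    if width <= height:
        return size, int(size * height / width)
    return int(size * width / height), size


def center_crop_box(width, height, crop):
    """Return (left, top, right, bottom) of a centered crop x crop window."""
    left = (width - crop) // 2
    top = (height - crop) // 2
    return left, top, left + crop, top + crop


image_size = 224
# A 500 x 375 input: the shorter side is scaled to 224 + 32 = 256.
w, h = resize_shorter_side(500, 375, image_size + 32)
print((w, h))
print(center_crop_box(w, h, image_size))
```

The 32-pixel margin means the center crop discards a thin border rather than rescaling the image straight to the target size.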

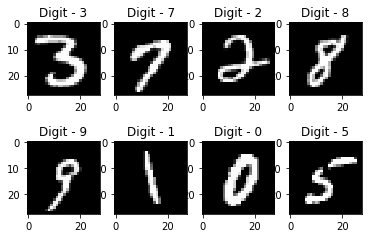

MNIST

class pl_bolts.datamodules.mnist_datamodule.MNISTDataModule(data_dir=None, val_split=0.2, num_workers=16, normalize=False, batch_size=32, seed=42, shuffle=False, pin_memory=False, drop_last=False, *args, **kwargs)

Bases: pytorch_lightning.

- Specs:

10 classes (1 per digit)

Each image is (1 x 28 x 28)

Standard MNIST, train, val, test splits and transforms

Transforms:

    mnist_transforms = transform_lib.Compose([
        transform_lib.ToTensor()
    ])

Example:

    from pytorch_lightning import Trainer
    from pl_bolts.datamodules import MNISTDataModule

    dm = MNISTDataModule('.')
    model = LitModel()  # LitModel is your own LightningModule
    Trainer().fit(model, datamodule=dm)

- Parameters

val_split (Union[int, float]) – Percent (float) or number (int) of samples to use for the validation split

num_workers (int) – How many workers to use for loading data

seed (int) – Random seed to be used for train/val/test splits

shuffle (bool) – If true, shuffles the train data every epoch

pin_memory (bool) – If true, the data loader will copy Tensors into CUDA pinned memory before returning them

Semi-supervised learning

The following datasets have support for unlabeled training and semi-supervised learning where only a few examples are labeled.

Imagenet (ssl)

class pl_bolts.datamodules.ssl_imagenet_datamodule.SSLImagenetDataModule(data_dir, meta_dir=None, num_workers=16, batch_size=32, shuffle=False, pin_memory=False, drop_last=False, *args, **kwargs)

Bases: pytorch_lightning.

STL-10

class pl_bolts.datamodules.stl10_datamodule.STL10DataModule(data_dir=None, unlabeled_val_split=5000, train_val_split=500, num_workers=16, batch_size=32, seed=42, shuffle=False, pin_memory=False, drop_last=False, *args, **kwargs)

Bases: pytorch_lightning.

- Specs:

10 classes (1 per type)

Each image is (3 x 96 x 96)

Standard STL-10, train, val, test splits and transforms. STL-10 supports validation splits on either the labeled or the unlabeled split.

Transforms:

    stl10_transforms = transform_lib.Compose([
        transform_lib.ToTensor(),
        transform_lib.Normalize(
            mean=(0.43, 0.42, 0.39),
            std=(0.27, 0.26, 0.27)
        )
    ])

Example:

    from pytorch_lightning import Trainer
    from pl_bolts.datamodules import STL10DataModule

    dm = STL10DataModule(PATH)
    model = LitModel()  # LitModel is your own LightningModule
    Trainer().fit(model, datamodule=dm)

- Parameters

unlabeled_val_split (int) – how many images from the unlabeled training split to use for validation

train_val_split (int) – how many images from the labeled training split to use for validation

num_workers (int) – how many workers to use for loading data

seed (int) – random seed to be used for train/val/test splits

pin_memory (bool) – If true, the data loader will copy Tensors into CUDA pinned memory before returning them

test_dataloader()

Loads the test split of STL10.

train_dataloader()

Loads the ‘unlabeled’ split minus a portion set aside for validation via unlabeled_val_split.

train_dataloader_mixed()

Loads a portion of the ‘unlabeled’ training data and ‘train’ (labeled) data. Both portions have a subset removed for validation via unlabeled_val_split and train_val_split.

val_dataloader()

Loads a portion of the ‘unlabeled’ training data set aside for validation.

The val dataset = (unlabeled - train_val_split)

val_dataloader_mixed()

Loads a portion of the ‘unlabeled’ training data set aside for validation, along with the portion of the ‘train’ dataset to be used for validation.

unlabeled_val = (unlabeled - train_val_split)

labeled_val = (train - train_val_split)

full_val = unlabeled_val + labeled_val
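As a rough worked example of the STL-10 loaders described above, the sketch below computes how many images each loader would see. It assumes that each held-out portion is exactly the count given by unlabeled_val_split or train_val_split, as the parameter descriptions state, and it uses the split sizes published with STL-10 (100,000 unlabeled and 5,000 labeled training images). The helper stl10_split_sizes is hypothetical and not part of pl_bolts.

```python
# STL-10 split sizes as published with the dataset.
UNLABELED = 100_000
LABELED_TRAIN = 5_000


def stl10_split_sizes(unlabeled_val_split=5000, train_val_split=500):
    """Dataset size behind each loader, under the assumptions stated above."""
    unlabeled_train = UNLABELED - unlabeled_val_split
    labeled_train = LABELED_TRAIN - train_val_split
    return {
        "train": unlabeled_train,
        "train_mixed": unlabeled_train + labeled_train,
        "val": unlabeled_val_split,
        "val_mixed": unlabeled_val_split + train_val_split,
    }


sizes = stl10_split_sizes()
print(sizes)
```

With the default arguments, the mixed validation set combines 5,000 unlabeled and 500 labeled images.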